
ETRI Proposes AI Standards for Safety and Trustworthiness

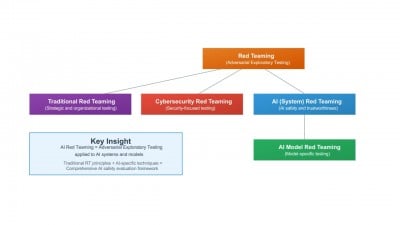

The Electronics and Telecommunications Research Institute (ETRI) has proposed two new international standards aimed at improving the safety and trustworthiness of artificial intelligence (AI) systems. The proposals, submitted to ISO/IEC, the joint standardization framework of the International Organization for Standardization and the International Electrotechnical Commission, are the “AI Red Team Testing” standard and the “Trustworthiness Fact Label (TFL)” standard.

The AI Red Team Testing standard is designed to identify potential risks in AI systems proactively. It would define rigorous testing procedures, in which testers deliberately probe a system the way an adversary would, to uncover vulnerabilities before they can be exploited. Through this standard, ETRI seeks to make AI deployment more secure, addressing concerns that have grown as AI becomes integrated into more sectors.

The Trustworthiness Fact Label (TFL) standard, by contrast, focuses on helping consumers understand AI systems. The label would present clear, concise information about the authenticity and reliability of an AI application. By making this information accessible, ETRI hopes to build consumer confidence in AI technologies and enable users to make informed decisions about the trustworthiness of the systems they interact with.

Global Impact and Development

The proposals are part of ETRI’s ongoing effort to advance technological standards globally. With the support of ISO/IEC, ETRI has begun full-scale development of both standards, which are expected to play an important role in shaping AI governance. The initiative highlights the importance of international collaboration in building frameworks that can manage the complexities of AI.

ETRI, based in South Korea, has been at the forefront of research and development in electronics and telecommunications. By proposing these standards, the institute not only reinforces its leadership in the field but also addresses the urgent need for robust regulatory measures in AI. The organization’s proactive approach aims to set a benchmark that could influence global practices and enhance safety in AI technologies.

A Future of Trustworthy AI

The AI Red Team Testing and TFL standards arrive at a timely moment, as the global conversation around AI safety intensifies. Concerns about data privacy, algorithmic bias, and the broader ethical implications of AI have prompted calls for stricter regulation. ETRI’s initiative is expected to contribute to this discussion by providing a framework that organizations worldwide can adopt.

As ETRI continues to develop these standards, the institute invites stakeholders from various sectors to participate in discussions regarding their implementation. By fostering an inclusive dialogue, ETRI aims to ensure that the standards reflect a comprehensive understanding of the challenges and opportunities presented by AI.

Taken together, the standards proposed by ETRI represent an important step toward ensuring that AI technologies are safe, reliable, and trustworthy. As the AI landscape continues to evolve, these initiatives could serve as a foundation for a more secure digital future.
