Urgent Update: Teens’ Final Diaries Reveal Chilling AI Chatbot Link

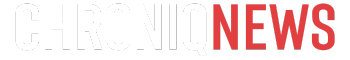

URGENT UPDATE: Two teenagers, Sewell Setzer III from Florida and Juliana Peralta from Colorado, tragically took their own lives months apart, leaving behind eerily similar messages in their final diaries. This devastating revelation has prompted their families to file lawsuits against the AI chatbot platform Character.AI, alleging its role in the circumstances surrounding their deaths.

Authorities confirm that both teenagers had been interacting with AI chatbots on the Character.AI platform prior to their suicides. These interactions reportedly occurred in the months leading up to their deaths, which took place in September 2023 and December 2023. The families claim the software’s influence contributed significantly to the mental health struggles faced by both teens.

The lawsuits highlight a troubling pattern: the final entries in both Sewell’s and Juliana’s diaries contained the same three haunting words. This coincidence has raised immediate concerns about the impact of AI on vulnerable users. Family representatives are calling for accountability from Character.AI, insisting that the platform must address the potential dangers posed to its users.

In a statement, representatives for the families said, “We want to shed light on the risks associated with AI chatbots. Our children deserved better support and protection.” This urgent call for action resonates not only with the families involved but also with a broader community increasingly aware of the mental health implications of technology.

Recent data shows a surge in mental health issues among teenagers, exacerbated by online interactions. Experts warn that AI chatbots, while designed to offer companionship and support, can inadvertently lead users down dark paths, especially when they are already vulnerable.

As this story develops, authorities and mental health advocates are urging a thorough investigation into the impact of AI on youth mental health. Families are advocating for changes in how AI technologies are monitored and regulated, emphasizing the need for safety measures to protect young users.

The community is left grappling with the emotional fallout of these tragic events, and support networks are mobilizing to provide help to those affected. Local organizations in both Florida and Colorado are ramping up efforts to raise awareness about mental health and the potential risks associated with AI interactions.

What happens next? The lawsuits are set to bring increased scrutiny to AI technology and its role in mental health. Observers are closely watching how Character.AI responds to these tragic incidents and what measures, if any, will be implemented to safeguard users in the future.

As discussions unfold, it is crucial to prioritize mental health resources for young people and ensure that technology serves as a beneficial tool rather than a harmful influence. The urgency of this situation cannot be overstated, as families and communities seek answers and solutions in the wake of these heartbreaking losses.

Stay tuned for updates on this developing story as more information becomes available.

-

Top Stories1 week ago

Top Stories1 week agoMarc Buoniconti’s Legacy: 40 Years Later, Lives Transformed

-

Sports3 weeks ago

Sports3 weeks agoSteve Kerr Supports Jonathan Kuminga After Ejection in Preseason Game

-

Politics3 weeks ago

Politics3 weeks agoDallin H. Oaks Assumes Leadership of Latter-day Saints Church

-

Business3 weeks ago

Business3 weeks agoTyler Technologies Set to Reveal Q3 2025 Earnings on October 22

-

Science3 weeks ago

Science3 weeks agoChicago’s Viral ‘Rat Hole’ Likely Created by Squirrel, Study Reveals

-

Lifestyle3 weeks ago

Lifestyle3 weeks agoDua Lipa Celebrates Passing GCSE Spanish During World Tour

-

Lifestyle3 weeks ago

Lifestyle3 weeks agoKelsea Ballerini Launches ‘Burn the Baggage’ Candle with Ranger Station

-

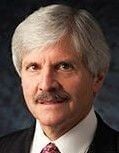

Health3 weeks ago

Health3 weeks agoRichard Feldman Urges Ban on Menthol in Cigarettes and Vapes

-

Entertainment3 weeks ago

Entertainment3 weeks agoZoe Saldana Advocates for James Cameron’s Avatar Documentary

-

Health3 weeks ago

Health3 weeks agoCommunity Unites for Seventh Annual Mental Health Awareness Walk

-

Sports3 weeks ago

Sports3 weeks agoPatriots Dominate Picks as Raiders Fall in Season Opener

-

Business3 weeks ago

Business3 weeks agoMega Millions Jackpot Reaches $600 Million Ahead of Drawings