Local LLM and NotebookLM Integration Boosts Research Efficiency

Pairing NotebookLM with a local large language model (LLM) can noticeably speed up digital research workflows. The approach combines NotebookLM's strengths in organizing and grounding sources with the speed and control of a locally run model, giving users an efficient system for handling complex projects. In the experiment described here, it turned a traditional research process into a more streamlined and productive one.

Revolutionizing Research Workflows

Many professionals run into frustration when managing extensive research projects. Tools like NotebookLM excel at organizing information and producing source-based insights, but they often lack the creative flexibility and speed of local LLMs. Combining the two lets users draw on the strengths of each.

The local LLM, set up in LM Studio, runs on the user's own machine, which provides the speed and privacy that research work calls for. Users can adjust model parameters and switch between models without incurring API costs. On its own, however, a local LLM is not grounded in the user's sources and struggles to provide the contextual accuracy that in-depth research requires. A hybrid approach therefore became essential for maximizing productivity.

Streamlined Integration Process

The integration process begins with the user asking the local LLM for a comprehensive overview of a new subject, such as self-hosting via Docker. The overview covers key aspects like security practices and networking fundamentals. This structured output is then copied into a NotebookLM project.
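The first step can be sketched in Python. This is a minimal illustration rather than the workflow's actual setup: it assumes LM Studio's local server is running with its OpenAI-compatible API at `http://localhost:1234/v1`, and the model identifier, prompt wording, and function names are hypothetical.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running and exposes its
# OpenAI-compatible API here (adjust host and port to match your setup).
BASE_URL = "http://localhost:1234/v1"


def build_overview_request(topic, model="local-model", temperature=0.3):
    """Build a chat-completion payload asking for a structured overview."""
    prompt = (
        f"Give a structured overview of {topic}. "
        "Cover key concepts, security practices, and networking fundamentals, "
        "using short headed sections."
    )
    return {
        # Hypothetical identifier: use the name of the model loaded in LM Studio.
        "model": model,
        # Sampling temperature, one of the parameters a local setup lets you tune.
        "temperature": temperature,
        "messages": [
            {"role": "system", "content": "You are a concise technical researcher."},
            {"role": "user", "content": prompt},
        ],
    }


def fetch_overview(topic, **kwargs):
    """Send the request to the local server and return the generated text."""
    payload = json.dumps(build_overview_request(topic, **kwargs)).encode("utf-8")
    req = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, `fetch_overview("self-hosting via Docker")` would return the overview text, and passing a different `model` or `temperature` is how switching models or adjusting parameters would look in this sketch.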

The NotebookLM project already holds a range of sources relevant to the subject, including PDFs, YouTube transcripts, and blog posts. Adding the local LLM's overview as one more source gives NotebookLM a structured map of the topic to work from. The result is a knowledge base that combines NotebookLM's source-grounded accuracy with the local LLM's rapid content generation.
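One way to make the overview easy to add as a source is to save it as a small Markdown file and upload it, or paste its contents, through the NotebookLM interface. The sketch below assumes that manual step; the function name, folder name, and header text are illustrative choices, not part of the original workflow.

```python
from datetime import date
from pathlib import Path


def save_overview_as_source(overview, topic, out_dir="notebooklm_sources"):
    """Write the local LLM's overview to a Markdown file and return its path."""
    folder = Path(out_dir)
    folder.mkdir(parents=True, exist_ok=True)

    # Build a filesystem-friendly file name from the topic.
    slug = "-".join(topic.lower().split())
    slug = "".join(ch for ch in slug if ch.isalnum() or ch == "-")
    path = folder / f"{slug}-overview.md"

    # Label the file so it stays distinguishable from primary sources.
    header = (
        f"# Overview: {topic}\n\n"
        f"Generated by a local LLM on {date.today().isoformat()}.\n\n"
    )
    path.write_text(header + overview.strip() + "\n", encoding="utf-8")
    return path
```

Labeling the file as LLM-generated keeps it easy to tell apart from the PDFs, transcripts, and blog posts when tracing a citation back to its source.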

Once the local LLM’s overview is integrated, users can pose complex questions to NotebookLM and receive prompt, relevant answers. This functionality significantly reduces the time spent on research tasks, allowing users to focus on deeper analysis.

Another feature that enhances this workflow is the audio overview generation. By clicking the Audio Overview button, users receive a personalized audio summary of their research, which can be listened to while away from their desks. This feature saves valuable time and allows for more efficient multitasking.

Additionally, NotebookLM’s source checking and citation capabilities provide assurance regarding the accuracy of information. Users can easily trace facts back to their original sources, avoiding the need for extensive manual verification. This efficient method allows researchers to validate their findings in a fraction of the time it typically takes.

The combination of a local LLM and NotebookLM not only improves speed but also enhances control over data and research processes. What started as a simple experiment has evolved into a transformative approach to managing complex projects. This method empowers users to break free from the limitations of solely cloud-based or local workflows.

As professionals increasingly seek to maximize productivity while ensuring data privacy, this integration serves as a new model for research environments. For those serious about enhancing their research capabilities, this pairing represents a significant advancement in how projects can be approached and managed.

Readers who want to go further with local LLMs can consult dedicated resources on other productivity workflows that this technology can streamline.

-

Top Stories2 weeks ago

Top Stories2 weeks agoMarc Buoniconti’s Legacy: 40 Years Later, Lives Transformed

-

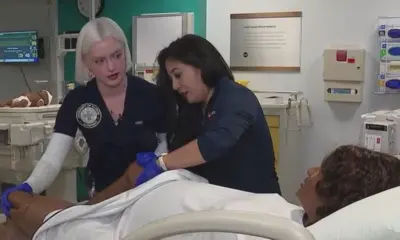

Health2 weeks ago

Health2 weeks agoInnovative Surgery Restores Confidence for Breast Cancer Patients

-

Sports3 weeks ago

Sports3 weeks agoSteve Kerr Supports Jonathan Kuminga After Ejection in Preseason Game

-

Science4 weeks ago

Science4 weeks agoChicago’s Viral ‘Rat Hole’ Likely Created by Squirrel, Study Reveals

-

Entertainment4 weeks ago

Entertainment4 weeks agoZoe Saldana Advocates for James Cameron’s Avatar Documentary

-

Business3 weeks ago

Business3 weeks agoTyler Technologies Set to Reveal Q3 2025 Earnings on October 22

-

Politics4 weeks ago

Politics4 weeks agoDallin H. Oaks Assumes Leadership of Latter-day Saints Church

-

Lifestyle3 weeks ago

Lifestyle3 weeks agoKelsea Ballerini Launches ‘Burn the Baggage’ Candle with Ranger Station

-

Business3 weeks ago

Business3 weeks agoZacks Research Downgrades Equinox Gold to Strong Sell Rating

-

Health2 weeks ago

Health2 weeks ago13-Year-Old Hospitalized After Swallowing 100 Magnets

-

Lifestyle4 weeks ago

Lifestyle4 weeks agoDua Lipa Celebrates Passing GCSE Spanish During World Tour

-

Health4 weeks ago

Health4 weeks agoCommunity Unites for Seventh Annual Mental Health Awareness Walk